In a new exhibition at Fondazione Prada, Trevor Paglen and Kate Crawford investigate AI’s political underpinnings.

From finding shapes in the clouds to seeing faces in nature to deciphering Rorschach inkblots, the human brain is a pattern recognition machine. We’re wired to extract meaning from abstract information—and in a landscape of rapid technological advancement, this ability is an integral feature of human intelligence and machine learning alike.

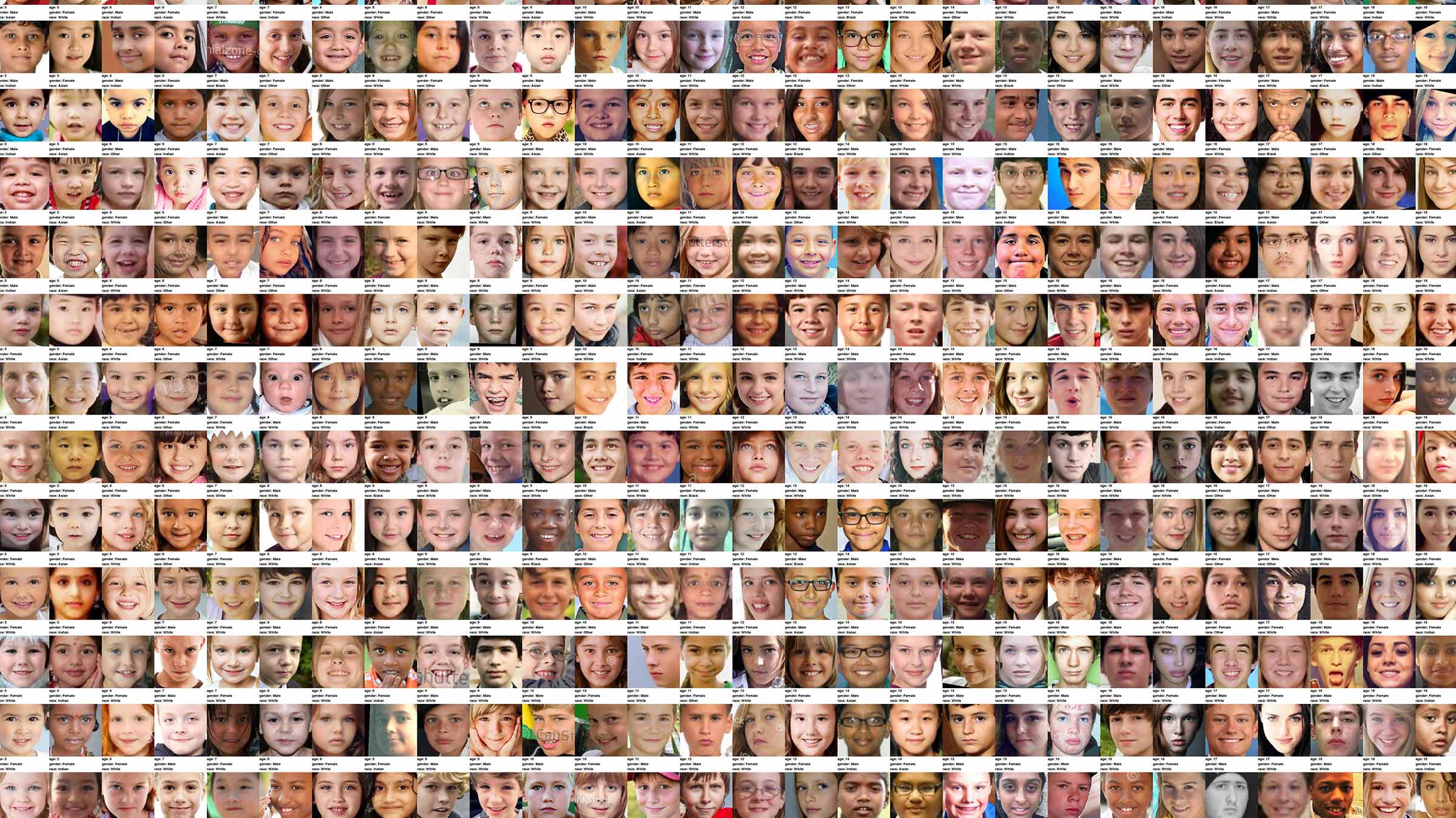

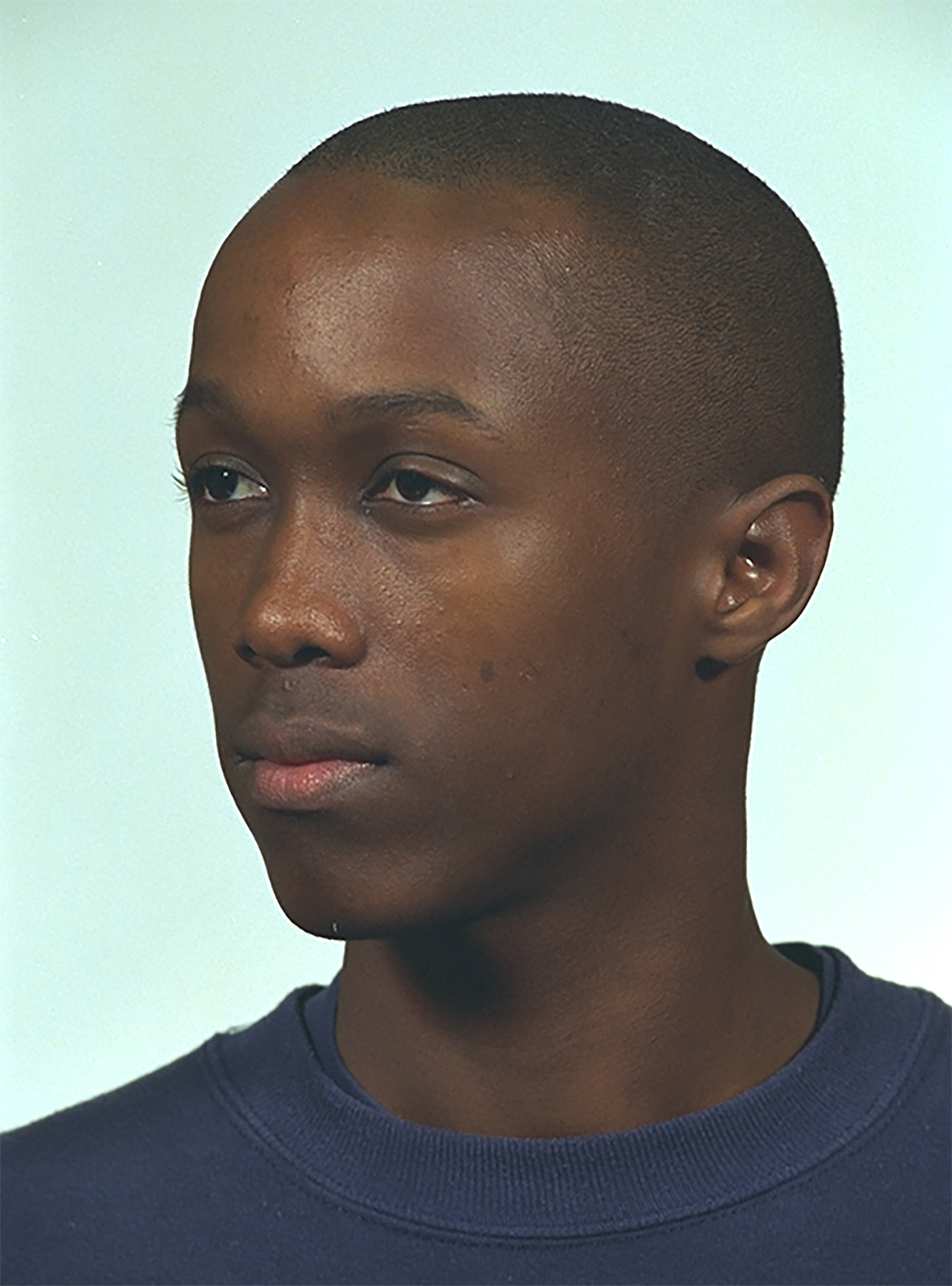

Today, Fondazione Prada will open ‘Training Humans,’ the first major photography exhibition dedicated to training images, the photos used to teach artificial intelligence systems to ‘see’ like people do. Conceived by artist Trevor Paglen and AI researcher Kate Crawford, the exhibition presents a collection of training image sets from the 1960s to the present and investigates how these photos shape the development and status of artificial intelligence. Sourced from multiple data sets, the collection traces the historical evolution of machine vision and offers a rare glimpse at its underpinnings: the secret world of images that weren’t made for human eyes.

Designed to recognize patterns in raw data, ‘deep learning,’ or ‘hierarchical learning,’ is a subset of machine learning loosely modeled on the structure of the human brain. Rather than following explicitly programmed rules, its artificial neural networks learn from examples, and some can even learn without supervision from unlabeled, unstructured data. This means that their judgements derive not from direct programming but from the patterns they detect in vast collections of images. Among those included in the show are images from data sets intended to teach AI to recognize people by emotion, gait, binary gender, and racial categories.

In today’s digital era, the rapid creation and proliferation of media has given us unprecedented access to images. The advent of social media has brought with it a huge influx of free data, with everything from public mugshots to Myspace pictures becoming available for use without the permission or consent of their subjects—raising questions about how increasingly invasive AI systems harvest and use this material. Yet this invasion of privacy also forms the basis of countless modern conveniences, from Google’s ‘search by image’ to so-called ‘smart albums,’ where the images stored on your phone are automatically sorted according to a series of predetermined themes and categories.

It has long been known that AI programs are prone to inheriting the biases of their creators, and because a neural network is only as strong as its data set, the prejudice often embedded in training data presents a particularly thorny issue for researchers. In 2016, Microsoft’s attempt to create an AI chatbot with the persona of a millennial famously ended in chaos when it began spouting racist and sexist epithets less than 24 hours after coming online. A 2018 study of commercial facial analysis software revealed significant gender and skin-type bias, with images of dark-skinned women misclassified nearly 35% of the time (compared with an error rate of less than 1% for light-skinned men). That same year Amazon, a veritable behemoth of automation, scrapped a secret AI recruiting tool after discovering that it was biased against women, having learned from years of gender disparity in the company’s hiring. Far from correcting that imbalance, the AI began downgrading graduates of two all-women’s colleges and penalizing resumes that included the word “women’s,” based on patterns in resumes submitted to the company over a 10-year period.

Teaching machines to “see” like people means replicating not only the internal logic of human vision but also the external power systems that inform our judgements. To understand the status of today’s artificial intelligence systems, then, we must look closely at what has been used to train them. As Paglen and Crawford state, “a stark power asymmetry lies at the heart of these tools. What we hope is that ‘Training Humans’ gives us at least a moment to start to look back at these systems and understand, in a more forensic way, how they see and categorize us.”

‘Training Humans’ is open at Fondazione Prada in Milan, Italy from September 19, 2019 through February 24, 2020.