From Steve Jobs to Albert Einstein, it’s an appealing notion that brilliant individuals are behind the world’s most significant creative breakthroughs. But is it true?

Some of the most famous minds have exploited the cult of the “lone creative genius.” Signature looks are a classic method: Steve Jobs’s turtlenecks, David Foster Wallace’s bandanas, Tom Wolfe’s white suits, Albert Einstein’s gray suits. So too are exaggerated claims of brilliance: F. Scott Fitzgerald admitted to his biographer Andrew Turnbull that he would lie about how long it took him to write certain stories, once claiming he could crank out a short story in a day when in reality one could take him months. (He thought this claim of impossible talent would help him sell more stories, and it probably did.)

Others have used the idea of the lone creative genius to get away with bad behavior. Pablo Picasso once said, “For me, there are only two kinds of women: goddesses and doormats.” He mostly treated them as the latter, and, shielded by his status as a lone creative genius, he was rarely questioned about it.

That the label of lone genius is predominantly applied to narcissistic men known to hurt others or break rules is hardly a surprise. A selection bias is at play: The people on the pedestals tend to be the kind of brash, self-centered people who would put themselves there.

It’s a challenging idea to escape because there seems to be a human desire to place ourselves at the foot of creative genius. Whether it’s Jobs, Picasso, or even Kanye, it’s an appealing notion that brilliant individuals, rather than teams of hardworking people with good fortune, are behind the world’s most significant creative breakthroughs. The idea that there are near-gods of creativity amongst us is a potent one, particularly in a world that feels increasingly unpredictable; if we can’t have the stability of God, at least we might have people who appear to come close to it.

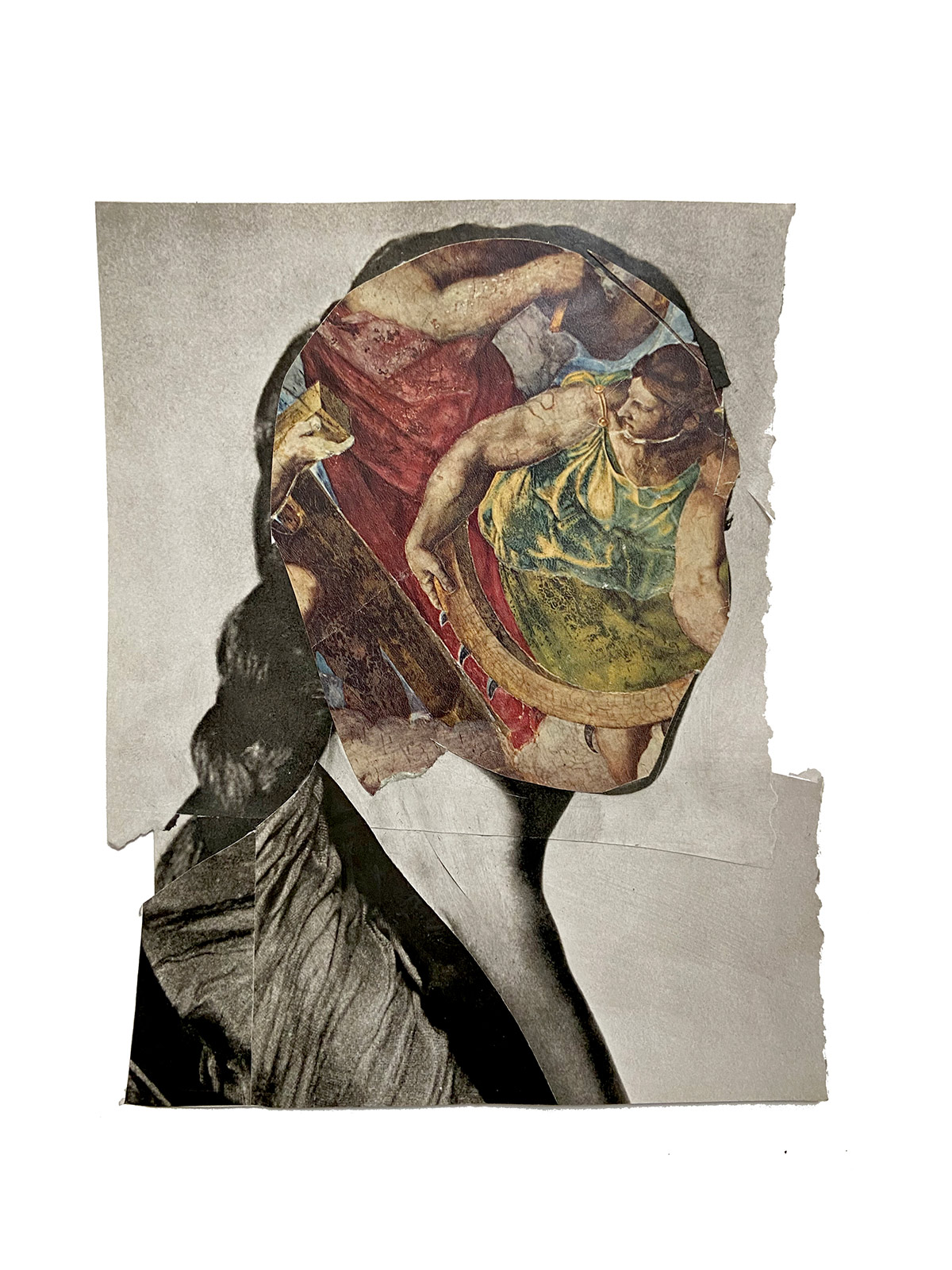

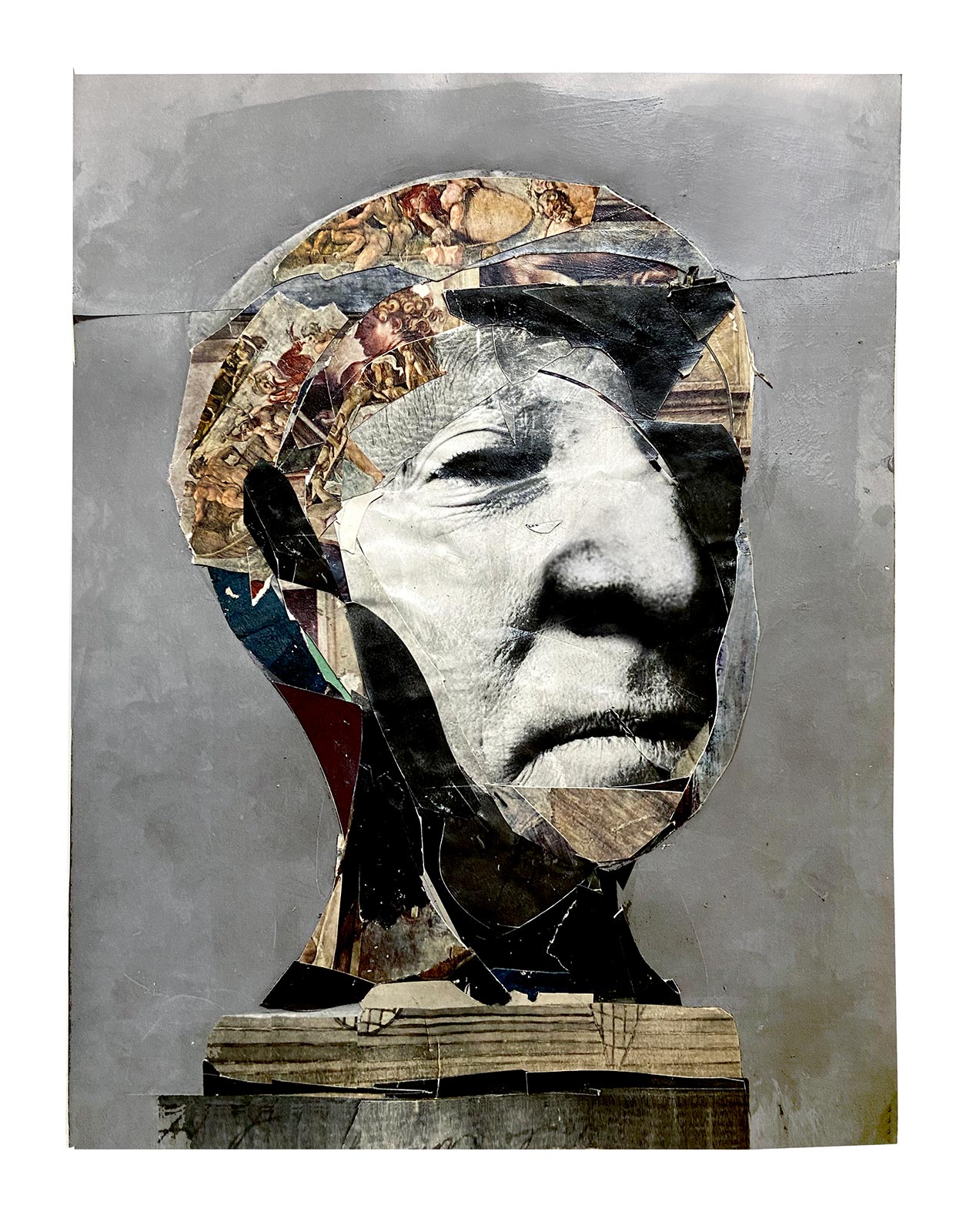

It wasn’t always this way. Prior to the Enlightenment, individuals were rarely referred to as “geniuses.” Rather, they had geniuses: supernatural or divine forces that gave them their ideas and creativity. The 16th-century Italian painter and historian Giorgio Vasari wrote that his friend Michelangelo was sent to Earth by “the great Ruler of Heaven.” (Today, Michelangelo would probably be invited to the Aspen Ideas Festival to share his tips on “sparking creativity.” Tote bags would be made; maybe a branded coffee mug.)

With the Enlightenment, however, as Europe moved away from religion and toward humanism, the idea of the lone genius became important in putting man at the center of the world. In 1710, Britain passed its first significant copyright law, the Statute of Anne, which made authors the sole owners of their works. This further placed individuals, rather than divine inspiration or borrowings from other works, at the heart of the creative act. In 1711, the English essayist Joseph Addison deemed Shakespeare one of “these great natural geniuses,” marking perhaps the first modern use of “genius” as applied to the individual.

The trouble, of course, is that creative genius is inextricably wrapped up in societal biases. People with strong educations, socioeconomic support systems, and social and professional connections tend to be overrepresented among those called geniuses. This is not necessarily because they’re intrinsically smarter but because they have more opportunities than their poorer, less-connected counterparts. Even so, it’s hard to determine whether genius is something people are born with and can cultivate, or whether it is achieved purely through hard work.

The ancient Greeks tended to believe the former: that creative genius was physical. Black bile in the body produced melancholia (that is, depression and delusion), which in turn gave rise to creativity, Aristotle reasoned. In 1790, Immanuel Kant put forth the first formal philosophy that genius is innate. “Genius is the talent (natural endowment)…the innate mental aptitude (ingenium) through which nature gives the rule to art,” he wrote in Critique of Judgement. In 1869, the English polymath Francis Galton set out to mathematically prove Kant’s thesis, studying the families of significant people throughout history. He never quite figured out how to measure “genius” qualities, though. He eventually claimed in his book Hereditary Genius to have proved his hypothesis without furnishing much in the way of usable data.

Though Galton’s attempts to prove Kant’s thesis of innate genius were more or less a bust, things began to change in 1916. That year, Lewis M. Terman, a psychology professor at Stanford University, published the Stanford-Binet, his revision of Alfred Binet’s intelligence test, which popularized IQ testing in the United States. Terman went on to study the lives of more than 1,500 children with very high IQs (140 or higher), predicting that most of them would make major contributions to their chosen professional fields as adults. By the time his subjects were in their 40s, a few had written books and important academic papers, but most of Terman’s 140-plus IQers had led professionally unexceptional lives. Meanwhile, some children Terman had tested but excluded for falling short of his IQ threshold went on to win major honors, including the Nobel Prize. Terman was dead by the time the results were in, but his work essentially undercut his own thesis: Childhood IQ proved to be an unreliable predictor of future success, which suggests that creative genius is probably not innate.

A more recent theory is that lone geniuses are simply hard workers, an idea most famously expressed in the “10,000-hour rule.” (You too can work your way to achieving something as ineffable as creativity!) Within America’s current hyper-individualized, neoliberalized culture, it’s a particularly attractive theory. The theory was popularized by writer Malcolm Gladwell, and it still gets touted from time to time. The problem is that it has since been discredited. The 10,000-hour rule rests on a misinterpretation of the data, says K. Anders Ericsson, the Florida State University psychologist who helped create the original study. “Malcolm Gladwell read our work, and he misinterpreted some of our findings. We [studied] violinists who had been at an international academy [and were] viewed as being on track for international careers,” Ericsson said on a podcast. “When we estimated how many hours they had spent working on trying to improve their performance by themselves, we came up with an average, across the group, of 10,000 hours. But that really meant that there was a fair amount of variability.”

Practicing for 10,000 hours is no more a guarantee of creative genius than the family you’re born into or your IQ. In an “Ask Me Anything” interview on Reddit, Gladwell clarified his idea: “Practice isn’t a sufficient condition for success. I could play chess for 100 years and I’ll never be a grandmaster. The point is simply that natural ability requires a huge investment of time in order to be made manifest.”

But too much practice can also backfire: Practicing only one musical instrument, painting technique, or writing style, for instance, can shut out the associations and unexpected pattern combinations that are the neurological hallmark of creativity.

The idea of the lone genius can also skew the truth behind a person’s success. To illustrate this, look no further than how Apple is typically described. Yes, Steve Jobs did a good deal of work, and his detail-oriented nature no doubt shaped the company. But too often the work of his co-founders Steve Wozniak and Ronald Wayne is elided from the narrative. The same goes for MacKenzie Scott’s role in the genesis of Amazon. According to Brad Stone’s The Everything Store: Jeff Bezos and the Age of Amazon, she left her job at a hedge fund in New York and traded her affluent life on the Upper West Side for a risky start-up in Seattle. There she handled the nascent company’s accounting and negotiated numerous contracts. Yet the media still frames Amazon’s success almost entirely around Bezos, as if he had operated alone, perpetuating the myth of success without social support.

Einstein, perhaps the prototypical lone genius, made his breakthroughs in physics only after spending years working in a Swiss patent office, where he pored over other people’s inventions; that work presumably helped spark his own ideas. The Nobel Prize-winning American chemist Martin Chalfie, Ph.D., recently gave talks across Canada for the Nobel Prize Inspiration Initiative about a related phenomenon. He told audiences of young scientists that he had initially been unsure about becoming a scientist because he couldn’t do all of his experiments by himself. Having been told his whole life that a scientist’s work was solitary, he figured he wasn’t cut out for it. “I felt that I had to do everything on my own, because asking for help was a sign that I was not intelligent enough,” he said. “I now see how destructive this attitude was, but then I assumed that this was what I had to do.”

Recent studies suggest that the most creative people are those who are “open to experience” and able to combine disparate ideas into new ones (a process termed “conceptual integration”). Locking yourself up in a lab alone would be one of the worst ways to have a creative breakthrough. It is far better to get other perspectives, to be out in the world, to create with others.